Partner solutions catalog

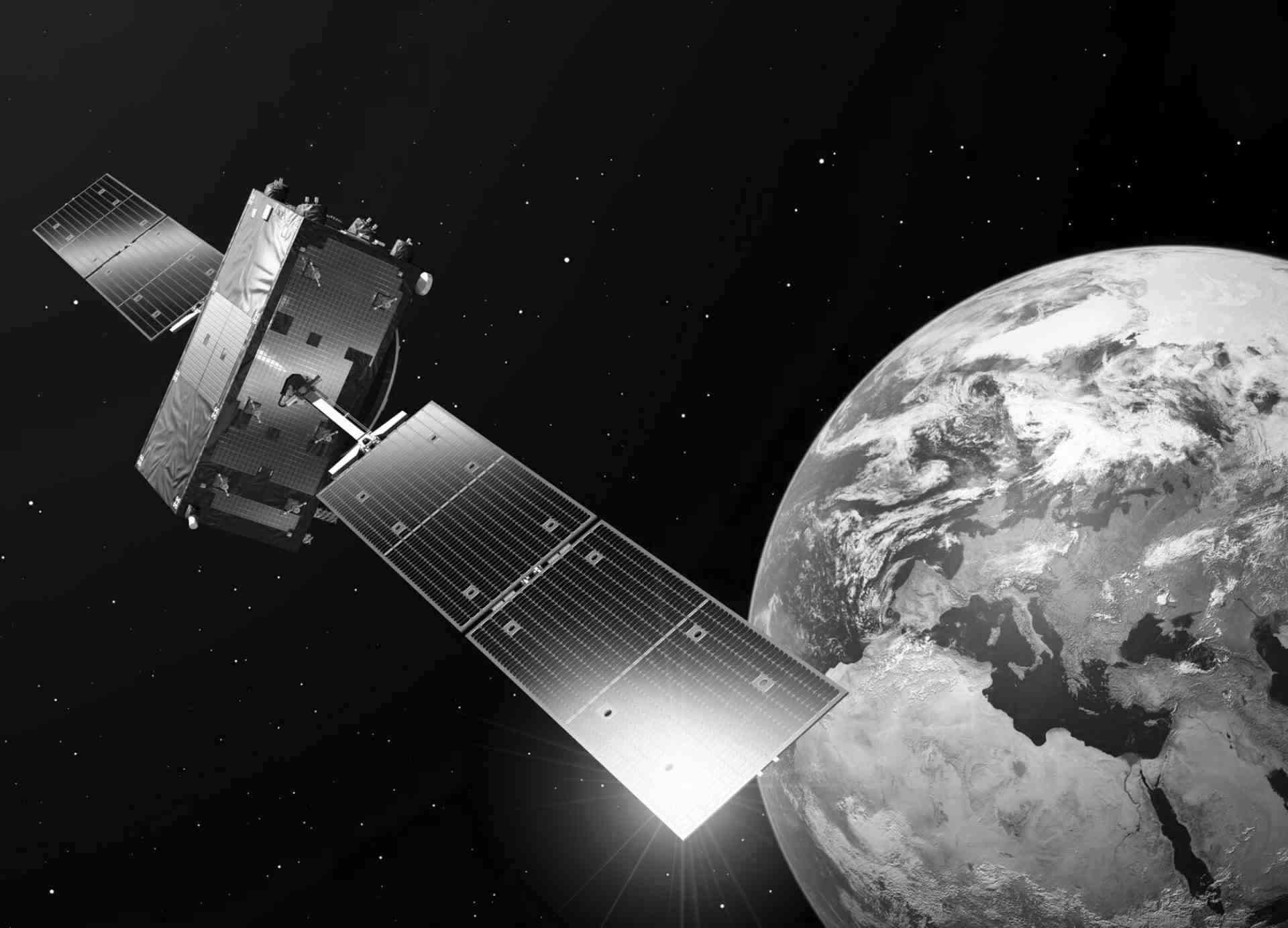

Below is a catalog of partners, application providers, and projects that use the infrastructure and data available on the CREODIAS platform.

CloudFerro S.A.

Operator of CloudFerro Cloud and EODATA Provider in CDSE.

CloudFerro is the largest company in the space sector in Poland and a major one in Europe. We are trusted by leading European firms and scientific institutions across various big-data-processing market sectors, including the European Space Agency (ESA), the European Centre for Medium-Range Weather Forecasts (ECMWF), the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT), the German Aerospace Centre (DLR), and many others. CloudFerro is the only Polish company in the space sector with prime contractor status for the above-mentioned institutions.