Mapping wetland fires using AI and Sentinel-2 data

Author: Marcin Kluczek, Data Scientist at CloudFerro

Advances in artificial intelligence (AI) and satellite remote sensing are opening new possibilities for monitoring and protecting vulnerable ecosystems. Wetlands are critical ecosystems that store significant amounts of carbon, regulate hydrological cycles, and provide habitats for numerous species (Turetsky et al., 2015). Over centuries, human activities, primarily drainage for agriculture, have led to a dramatic reduction of wetland areas worldwide, making their conservation increasingly crucial.

Fires pose a severe threat to wetlands, particularly at the beginning or end of the vegetation season when plant material becomes dry and highly flammable. Wetland fires significantly impact bird populations by reducing available habitats, especially for rare and endangered species (Walesiak et al., 2022). They also decrease ecosystem biodiversity by promoting the spread of invasive plant species at the expense of rare wetland species (Sulwiński et al., 2020).

One of Europe's largest wetland complexes, the Biebrza Wetlands in northeastern Poland, forms the Biebrza National Park and is protected under the Ramsar Convention. On April 20, 2025, a fire broke out in the area. Due to the challenging wetland terrain and a lack of accessible roads, firefighting operations were difficult to conduct. In such cases, access to up-to-date satellite imagery is crucial for monitoring fire extent and supporting operational decision-making.

To support post-fire assessment in this hard-to-access area, we combined up-to-date Sentinel-2 imagery obtained from the CREODIAS service with a state-of-the-art AI segmentation approach. Instead of relying solely on manual inspection or threshold-based methods, we applied the Segment Anything Model (SAM) (Kirillov et al., 2023), a foundation model capable of segmenting image regions based on multimodal input.
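For context, the kind of threshold-based method mentioned above can be sketched in a few lines. The example below computes the Normalized Burn Ratio, NBR = (NIR - SWIR) / (NIR + SWIR), and flags pixels below a cut-off. It is only an illustration, not the approach used in this article: the reflectance values are toy numbers, the cut-off of -0.1 is a hypothetical choice, and the band pairing (commonly B8A or B08 with B12 for Sentinel-2) is an assumption that would need tuning for a real scene.

```python
import numpy as np


def normalized_burn_ratio(nir, swir):
    """Per-pixel NBR = (NIR - SWIR) / (NIR + SWIR)."""
    nir = nir.astype(np.float32)
    swir = swir.astype(np.float32)
    denom = nir + swir
    # Guard against division by zero where both bands are 0 (e.g. nodata).
    safe = np.where(denom == 0, 1.0, denom)
    return np.where(denom == 0, 0.0, (nir - swir) / safe)


# Toy reflectance values (hypothetical): vegetation has high NIR and low
# SWIR, while burned ground tends to show the opposite pattern.
nir = np.array([[4000, 3800], [1200, 1000]])
swir = np.array([[1500, 1600], [2600, 2800]])

nbr = normalized_burn_ratio(nir, swir)
burned = nbr < -0.1  # hypothetical threshold, must be tuned per scene
print(burned)
```

A fixed cut-off like this is simple and fast, but it has to be re-tuned for each scene and season, which is one reason to explore prompt-based segmentation instead.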

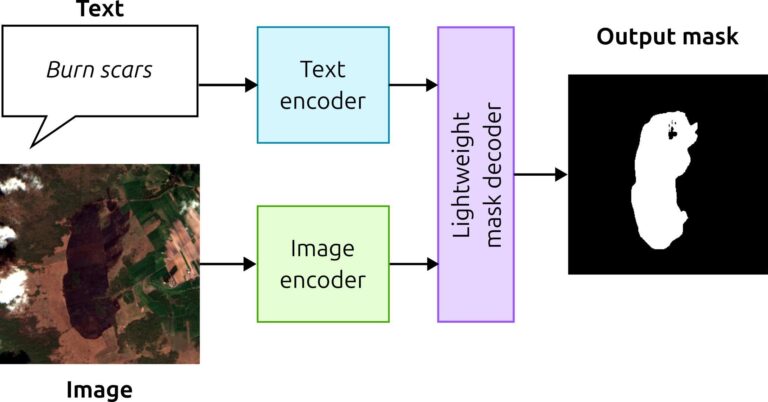

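SAM consists of an image encoder, a prompt encoder, and a mask decoder. The original model is prompted with points, boxes, or masks. In the text-prompted variant used here (LangSAM in SamGeo), the text query is first converted into candidate bounding boxes by a grounding detector (Grounding DINO), and those boxes are then passed to SAM as prompts. In this study, Sentinel-2 imagery was clipped to the area of interest, then preprocessed and normalized to ensure compatibility with the model’s requirements.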
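The normalization step can be illustrated on a small array. The sketch below applies per-band min-max scaling to produce an 8-bit image, which mirrors what the code in Part I does. The helper name `minmax_to_uint8` and the toy input values are assumptions made for this example.

```python
import numpy as np


def minmax_to_uint8(bands):
    """Scale each band of a (bands, rows, cols) array to the 0-255 range."""
    bands = bands.astype(np.float32)
    lo = bands.min(axis=(1, 2), keepdims=True)
    hi = bands.max(axis=(1, 2), keepdims=True)
    # The small epsilon avoids division by zero for constant bands.
    scaled = (bands - lo) / (hi - lo + 1e-10)
    return (scaled * 255).astype(np.uint8)


# Toy 3-band image with a different value range in each band.
reflectance = np.arange(48).reshape(3, 4, 4) * 100
rgb = minmax_to_uint8(reflectance)

print(rgb.dtype, rgb.shape)
print(rgb.min(axis=(1, 2)), rgb.max(axis=(1, 2)))
```

Because each band is stretched independently, the result is tuned for visual contrast rather than physical reflectance. A single very bright pixel can compress the rest of the range, so a percentile-based stretch may be worth testing on scenes with clouds or water glint.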

We used the SamGeo implementation of the SAM model (Wu and Osco, 2023) and prompted it with a simple text query: “burn scars”. The image and text inputs were processed by the model, resulting in a binary mask that delineated the fire-affected area. This AI-driven segmentation enabled rapid, reproducible, and scalable mapping of burn scars without the need for manual annotation or prior local training. Based on the generated mask, spatial calculations were performed to estimate the extent of the burned area.
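As a rough cross-check of such spatial calculations, the area can also be estimated directly from a raster mask by counting pixels. The sketch below assumes a projected CRS with square 10 m pixels, as in the Sentinel-2 R10m bands used in Part I. The function name `mask_area_km2` and the synthetic mask are hypothetical. Part II of this article computes the area from vectorized polygons instead.

```python
import numpy as np


def mask_area_km2(mask, pixel_size_m=10.0):
    """Area covered by the non-zero pixels of a mask, in square kilometres."""
    n_pixels = int(np.count_nonzero(mask))
    return n_pixels * pixel_size_m ** 2 / 1_000_000


# Synthetic mask: 100 rows x 183 columns = 18,300 burned pixels.
mask = np.zeros((300, 340), dtype=np.uint8)
mask[50:150, 100:283] = 1

print(f"{mask_area_km2(mask):.2f} km²")  # prints "1.83 km²"
```

At 10 m resolution each pixel covers 100 m², so an area of 1.83 km² corresponds to about 18,300 pixels.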

The fire affected approximately 1.83 km² of the Biebrza Wetlands, with estimated dimensions of about 2.5 km by 1.2 km. Although the burned area was smaller than that of the extensive 2020 fire, which covered approximately 55 km², the recurrence and increasing frequency of fires in the region are causes for concern. Even small-scale fires contribute to habitat degradation, a decline in biodiversity, and long-term ecosystem impoverishment. Continuous monitoring using advanced satellite data and AI-driven methods is essential for the effective management and conservation of vulnerable ecosystems.

Part I - preprocess image

Import libraries

import os

import geopandas as gpd
import numpy as np
import rasterio
from rasterio.mask import mask
from shapely.geometry import box

Set environment variables

# S3 endpoint and credentials for the EODATA repository
os.environ['AWS_S3_ENDPOINT'] = "eodata.cloudferro.com"
os.environ['AWS_ACCESS_KEY_ID'] = "<INSERT YOUR PUBLIC KEY>"
os.environ['AWS_SECRET_ACCESS_KEY'] = "<INSERT YOUR PRIVATE KEY>"
os.environ['AWS_HTTPS'] = "YES"
# Use path-style addressing instead of virtual-hosted bucket names
os.environ['AWS_VIRTUAL_HOSTING'] = "FALSE"

# GDAL HTTP settings: keep connections alive and retry on failed requests
os.environ['GDAL_HTTP_TCP_KEEPALIVE'] = "YES"
# Note: this disables SSL certificate verification
os.environ['GDAL_HTTP_UNSAFESSL'] = "YES"
os.environ["GDAL_HTTP_MAX_RETRY"] = "5"
os.environ["GDAL_HTTP_RETRY_DELAY"] = "30"
os.environ["GDAL_HTTP_MAX_CONNECTIONS"] = "10"

Define Input and Output Paths

# Blue (B02), green (B03), and red (B04) bands at 10 m resolution
paths = [
    's3://EODATA/Sentinel-2/MSI/L2A/2025/04/23/S2C_MSIL2A_20250423T094051_N0511_R036_T34UFE_20250423T151018.SAFE/GRANULE/L2A_T34UFE_A003295_20250423T094446/IMG_DATA/R10m/T34UFE_20250423T094051_B02_10m.jp2',
    's3://EODATA/Sentinel-2/MSI/L2A/2025/04/23/S2C_MSIL2A_20250423T094051_N0511_R036_T34UFE_20250423T151018.SAFE/GRANULE/L2A_T34UFE_A003295_20250423T094446/IMG_DATA/R10m/T34UFE_20250423T094051_B03_10m.jp2',
    's3://EODATA/Sentinel-2/MSI/L2A/2025/04/23/S2C_MSIL2A_20250423T094051_N0511_R036_T34UFE_20250423T151018.SAFE/GRANULE/L2A_T34UFE_A003295_20250423T094446/IMG_DATA/R10m/T34UFE_20250423T094051_B04_10m.jp2',
]

clipped_output_path = 'RGB_burn_scars.tif'

Define and Create Bounding Box

# Area of interest as [min_x, min_y, max_x, max_y] in UTM zone 34N (EPSG:32634)
bbox = [622908.376, 5941055.448, 626316.237, 5944113.115]
min_x, min_y, max_x, max_y = bbox

polygon = box(min_x, min_y, max_x, max_y)
gdf = gpd.GeoDataFrame({"geometry": [polygon]}, crs="EPSG:32634")

Clip and Process Sentinel-2 Bands

with rasterio.open(paths[0]) as b02, rasterio.open(paths[1]) as b03, rasterio.open(paths[2]) as b04:
    raster_crs = b02.crs

    # Reproject the bounding box if it does not match the raster CRS
    if gdf.crs != raster_crs:
        gdf = gdf.to_crs(raster_crs)

    clipped_bands = []
    out_transform = None

    # Clip each band to the bounding box, in red, green, blue order
    for src in [b04, b03, b02]:
        out_image, out_transform = mask(src, gdf.geometry, crop=True, nodata=0)
        clipped_bands.append(out_image[0])

# Stack into a (3, rows, cols) array and scale each band to 0-255
out_image = np.stack(clipped_bands, axis=0)
out_image = out_image.astype(np.float32)
out_image = np.clip(out_image, 0, None)
out_image -= out_image.min(axis=(1, 2), keepdims=True)
out_image /= out_image.max(axis=(1, 2), keepdims=True) + 1e-10
out_image *= 255
out_image = out_image.astype(np.uint8)

# Write the 8-bit RGB composite as a GeoTIFF
with rasterio.open(
    clipped_output_path,
    'w',
    driver='GTiff',
    height=out_image.shape[1],
    width=out_image.shape[2],
    count=3,
    dtype=out_image.dtype,
    crs=raster_crs,
    transform=out_transform,
    nodata=0
) as dst:
    dst.write(out_image)

Validate Output

if os.path.exists(clipped_output_path):
    print(f"Clipped and converted RGB image saved to: {clipped_output_path}")

    # Inspect the saved file only if it exists
    with rasterio.open(clipped_output_path) as src:
        print(f"Output shape: {src.shape}")
        print(f"Output bands: {src.count}")
        print(f"Output CRS: {src.crs}")
        print(f"Output bounds: {src.bounds}")
else:
    print("Failed to save the image.")

Part II - detect burn scars

Import Required Module

from samgeo.text_sam import LangSAM

Define Parameters

bbox = [622908.376, 5941055.448, 626316.237, 5944113.115]
image = "RGB_burn_scars.tif"
text_prompt = "burn scars"

Perform Segmentation

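sam = LangSAM()

# box_threshold and text_threshold set the minimum detection confidence;
# lower values return more candidate regions
sam.predict(
    image=image,
    text_prompt=text_prompt,
    box_threshold=0.24,
    text_threshold=0.24
)

Visualization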

# Output paths must be defined before the mask is written
output_raster = "burnscars.tif"
output_vector = "burnscars.gpkg"

# Save the binary mask as a GeoTIFF
sam.show_anns(
    cmap="Greys_r",
    add_boxes=False,
    alpha=1,
    title="Automatic Segmentation of Burn Scars",
    blend=False,
    output=output_raster
)

# Overlay the mask on the input image
sam.show_anns(
    cmap="Greens",
    add_boxes=False,
    alpha=0.5,
    title="Automatic Segmentation of Burn Scars"
)

Save Results and Calculate Area

# Convert the raster mask to polygons
sam.raster_to_vector(output_raster, output_vector)

gdf = gpd.read_file(output_vector)

# Areas are only meaningful in a projected CRS with metric units
if gdf.crs.is_geographic:
    raise ValueError("The CRS is geographic (lat/lon). Please reproject to a projected CRS (e.g., UTM) for accurate area calculations.")

gdf['area_m2'] = gdf.geometry.area
total_area_km2 = gdf['area_m2'].sum() / 1_000_000

print(f"Total area of segmented features: {total_area_km2:.2f} km²")

References

Turetsky, M., Benscoter, B., Page, S., et al. 2015. Global vulnerability of peatlands to fire and carbon loss. Nature Geoscience, 8, 11–14. https://doi.org/10.1038/ngeo2325.

Walesiak, M., Mikusiński, G., Borowski, Z., et al. 2022. Large fire initially reduces bird diversity in Poland’s largest wetland biodiversity hotspot. Biodiversity and Conservation, 31, 1037–1056. https://doi.org/10.1007/s10531-022-02376-y.

Sulwiński, M., Mętrak, M., Wilk, M., & Suska-Malawska, M. 2020. Smouldering fire in a nutrient-limited wetland ecosystem: Long-lasting changes in water and soil chemistry facilitate shrub expansion into a drained burned fen. Science of The Total Environment, 746, 141142. https://doi.org/10.1016/j.scitotenv.2020.141142.

Kirillov, A., et al. 2023. Segment Anything. 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 3992–4003. https://doi.org/10.1109/ICCV51070.2023.00371.

Wu, Q., & Osco, L. 2023. samgeo: A Python package for segmenting geospatial data with the Segment Anything Model (SAM). Journal of Open Source Software, 8(89), 5663. https://doi.org/10.21105/joss.05663.